If you’ve ever wondered why running a Bitcoin node requires beefy hardware, you’re not alone. The challenge boils down to something called the UTXO set, essentially Bitcoin’s address book of who can spend what. As of early 2025, this set has ballooned to over 11GB containing 179 million outputs. Managing it efficiently is crucial for keeping the Bitcoin network decentralized and accessible.

We’ve been working on a machine learning solution that, in simulation, reduces disk accesses by nearly 19% during block validation, potentially lowering the hardware requirements for running a Bitcoin node.

The Problem: A Growing Database

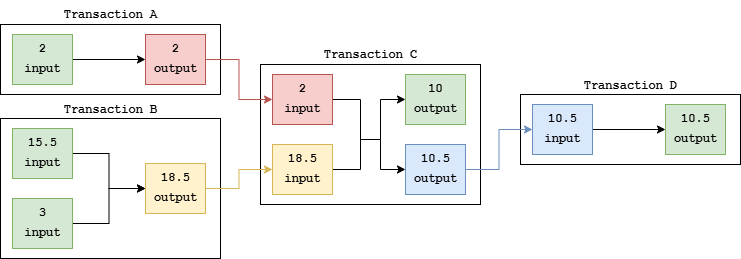

Think of Bitcoin transactions like a chain of checks. Each transaction spends outputs from previous transactions and creates new ones. The UTXO (Unspent Transaction Output) set tracks all these unspent outputs. It’s the single source of truth Bitcoin Core needs to validate new transactions.
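To make the idea concrete, here is a minimal sketch of a UTXO set as a plain Python dictionary. It is a toy model, not how Bitcoin Core stores the set: the `apply_transaction` name, the `(txid, index)` key, and the integer amounts are assumptions for illustration, and it ignores scripts, signatures, and coinbase transactions.

```python
from typing import Dict, List, Tuple

# An outpoint identifies one output: (transaction id, output index).
Outpoint = Tuple[str, int]


def apply_transaction(
    utxo_set: Dict[Outpoint, int],
    txid: str,
    inputs: List[Outpoint],
    output_values: List[int],
) -> None:
    """Spend the referenced outputs and add the newly created ones."""
    # The same output cannot be spent twice within one transaction.
    if len(set(inputs)) != len(inputs):
        raise ValueError("duplicate input")
    # Every input must refer to an output that is still unspent.
    for outpoint in inputs:
        if outpoint not in utxo_set:
            raise ValueError(f"missing or already spent: {outpoint}")
    # A transaction cannot create more value than it spends.
    if sum(output_values) > sum(utxo_set[o] for o in inputs):
        raise ValueError("outputs exceed inputs")
    # Remove the spent outputs, then register the new ones.
    for outpoint in inputs:
        del utxo_set[outpoint]
    for index, value in enumerate(output_values):
        utxo_set[(txid, index)] = value
```

Every lookup in this dictionary corresponds to a cache or disk access in a real node, which is why the size of the set matters so much.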

The challenge? Most Bitcoin nodes store this massive set on disk, using only a small in-memory cache (450MB by default) to speed things up. This cache currently offers only about a 10% improvement in validation time because it functions primarily as a write buffer for new transactions, not as a smart predictor of what you’ll need next.

The Solution: Predicting the Future

We asked a question: What if we could predict which UTXOs are about to be spent and keep those in memory instead?

We built a machine learning model using a Decision Tree Regressor that analyzes eight features of each UTXO, including:

- How long ago it was created

- Its position in the transaction

- The amount stored

- The script type

- Whether it came from mining rewards

The most influential factor turned out to be how long the UTXO has existed, followed by its index position and value. This makes intuitive sense. Older UTXOs sitting idle are less likely to be spent soon than recently created ones.
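As a rough illustration, the five features listed above could be turned into a numeric row like this. The `Utxo` fields, the `feature_vector` function, and the script-type encoding are hypothetical names chosen for this sketch. The post does not describe the actual encoding or the remaining features, so they are left out.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical integer codes for script types (an assumption, not the
# encoding used in the research).
SCRIPT_TYPE_CODES = {"p2pkh": 0, "p2sh": 1, "p2wpkh": 2, "p2wsh": 3, "p2tr": 4}


@dataclass
class Utxo:
    created_height: int   # block height at which the output was created
    output_index: int     # position of the output within its transaction
    amount_sats: int      # value stored, in satoshis
    script_type: str      # e.g. "p2wpkh"
    is_coinbase: bool     # True if the output came from a mining reward


def feature_vector(utxo: Utxo, current_height: int) -> List[float]:
    """Build one numeric feature row for a UTXO at the given chain height."""
    return [
        float(current_height - utxo.created_height),  # age in blocks
        float(utxo.output_index),
        float(utxo.amount_sats),
        float(SCRIPT_TYPE_CODES.get(utxo.script_type, -1)),  # -1 = unknown type
        1.0 if utxo.is_coinbase else 0.0,
    ]
```

Rows like these are the kind of input a tree-based regressor (for example scikit-learn's `DecisionTreeRegressor`) can be trained on.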

Simulation Performance

Our model’s predictions have a mean absolute error of 999 blocks (approximately 7 days at Bitcoin’s 10-minute target block interval). While that might sound imprecise, remember that Bitcoin has been running for over 15 years and has produced hundreds of thousands of blocks, so an error of about a week is small compared with the range of possible UTXO lifetimes.
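For readers who want to check the arithmetic, here is a small sketch of how a mean absolute error in blocks is computed and converted to days. The function names are ours, and the conversion assumes Bitcoin's 10-minute target block interval.

```python
from typing import Sequence

BLOCK_INTERVAL_MINUTES = 10  # Bitcoin's target spacing between blocks


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute difference between predicted and actual values."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def blocks_to_days(blocks: float) -> float:
    """Convert a number of blocks to days at the target block interval."""
    return blocks * BLOCK_INTERVAL_MINUTES / (60 * 24)
```

With these definitions, `blocks_to_days(999)` gives 6.9375, which is where the "approximately 7 days" figure comes from.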

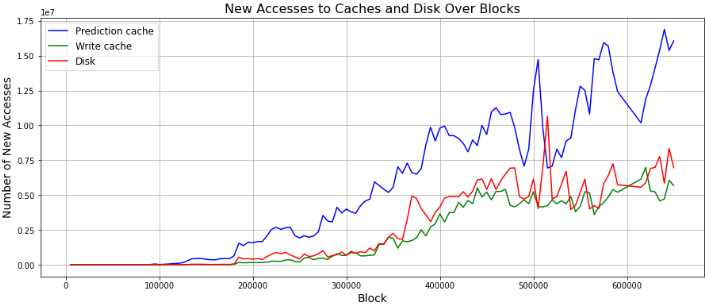

To test our approach, we created a dual-cache system:

- Prediction cache (200MB): Holds UTXOs the model predicts will be spent soon

- Write cache (250MB): Retains the traditional write buffer functionality

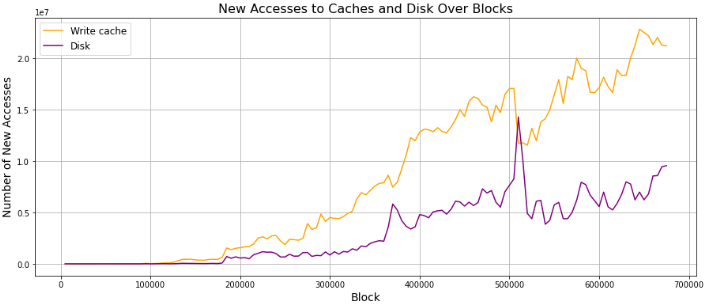

We split the default 450MB allocation between these two caches and ran simulations through 600,000 blocks of actual Bitcoin history.
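A simplified sketch of the dual-cache idea is shown below. It is not the simulator used in the research: the `DualCache` class is hypothetical, capacities are counted in entries rather than megabytes, the predicted spend height is supplied by the caller, and only reads that miss both caches are counted as disk accesses.

```python
from collections import OrderedDict
from typing import Dict, Hashable


class DualCache:
    """Toy model of a prediction cache plus a write buffer."""

    def __init__(self, prediction_capacity: int, write_capacity: int) -> None:
        self.prediction_capacity = prediction_capacity
        self.write_capacity = write_capacity
        # outpoint -> predicted block height at which it will be spent
        self.prediction: Dict[Hashable, int] = {}
        # Insertion-ordered write buffer of recently created outputs.
        self.write: "OrderedDict[Hashable, None]" = OrderedDict()
        self.disk_accesses = 0

    def add_output(self, outpoint: Hashable, predicted_spend_height: int) -> None:
        """Register a newly created output in both caches."""
        self.write[outpoint] = None
        if len(self.write) > self.write_capacity:
            self.write.popitem(last=False)  # oldest entry is flushed to disk
        self.prediction[outpoint] = predicted_spend_height
        if len(self.prediction) > self.prediction_capacity:
            # Evict the output predicted to be spent furthest in the future.
            # (Linear scan for clarity; a heap would scale better.)
            furthest = max(self.prediction, key=self.prediction.get)
            del self.prediction[furthest]

    def spend(self, outpoint: Hashable) -> bool:
        """Spend an output; return True on a cache hit, False on a disk read."""
        hit = outpoint in self.write or outpoint in self.prediction
        self.write.pop(outpoint, None)
        self.prediction.pop(outpoint, None)
        if not hit:
            self.disk_accesses += 1
        return hit
```

Setting `prediction_capacity` to 0 turns the sketch into a write buffer only, which gives a rough stand-in for the default configuration when comparing the two.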

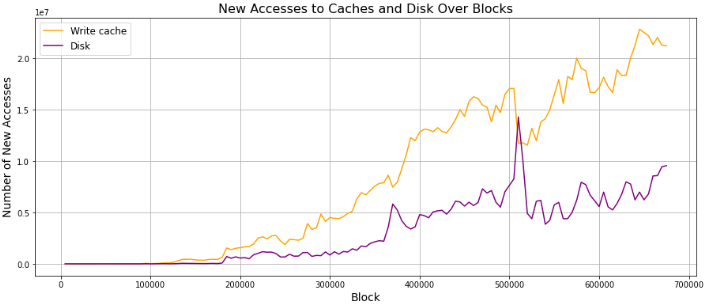

We compared two scenarios: the default single-cache configuration and the dual-cache configuration.

The Results Speak for Themselves

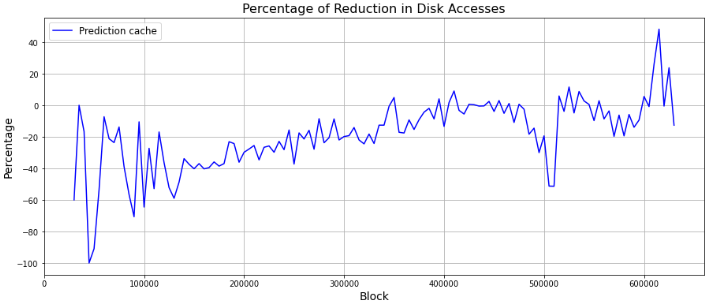

Our dual-cache approach reduced disk accesses by an average of 18.62% compared to the default configuration. While the graph might make this look modest, we’re talking about millions of disk operations, each one adding precious milliseconds to validation time.
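The percentage itself is a simple relative difference between two disk-access counts. A minimal sketch follows, with a hypothetical function name. Any counts you plug in are your own: the post reports only the average reduction, not the raw numbers.

```python
def disk_access_reduction(baseline_accesses: int, dual_cache_accesses: int) -> float:
    """Percentage reduction in disk accesses relative to the baseline."""
    if baseline_accesses <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline_accesses - dual_cache_accesses) / baseline_accesses
```

For instance, purely illustrative counts of 1,000,000 baseline accesses and 813,800 dual-cache accesses would correspond to an 18.62% reduction.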

The beauty of this approach is that it adapts over time. Every 10,000 blocks (roughly 70 days), the system retrains the model on recent transaction patterns, ensuring it stays current with evolving Bitcoin usage.
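The retraining schedule can be sketched in a few lines. The 10,000-block interval comes from the text above. The function names and the choice to train on the most recent 10,000 blocks are assumptions made for this example, since the post only says "recent transaction patterns".

```python
RETRAIN_INTERVAL_BLOCKS = 10_000  # interval stated in the article


def should_retrain(height: int, interval: int = RETRAIN_INTERVAL_BLOCKS) -> bool:
    """Return True when the chain reaches a retraining boundary."""
    return height > 0 and height % interval == 0


def training_window(height: int, window: int = RETRAIN_INTERVAL_BLOCKS) -> range:
    """Block heights to train on: the `window` blocks before `height`.

    The window size is an assumption, not a detail from the research.
    """
    start = max(0, height - window)
    return range(start, height)
```

At height 20,000, for example, `should_retrain` returns `True` and `training_window` covers blocks 10,000 through 19,999.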

Why This Matters

Reducing validation time and hardware requirements isn’t just about convenience. It’s about decentralization. The easier and cheaper it is to run a full Bitcoin node, the more people can participate in validating the network. More nodes mean:

- Greater network resilience

- Improved transaction verification speed

- Lower barriers to entry for new participants

Looking Ahead

This is just the beginning. Future work includes deploying the system in an actual Bitcoin Core node and refining the model further. We also need to balance the added complexity of machine learning integration with Bitcoin Core’s maintenance requirements.

What’s particularly exciting is how this approach could extend beyond Bitcoin. Any UTXO-based blockchain could benefit from predictive caching, potentially improving performance across numerous cryptocurrency networks.

The Bottom Line

By applying machine learning to a fundamental blockchain challenge, we’ve shown in simulation that even mature systems like Bitcoin still have room for optimization. The 18.62% reduction in disk accesses might not sound revolutionary, but in a network processing billions of dollars in daily transactions, every percentage point matters.

As Bitcoin continues to grow and evolve, innovations like predictive caching could be key to keeping the network accessible, efficient, and truly decentralized for years to come.

This research was presented at the 2025 International Conference on Blockchain Computing and Applications and was partially supported by Spanish government grants for securing cyber networks.